SQL Server is a powerful relational database management system that has been designed to handle large amounts of data. In today’s data-driven world, organizations are constantly dealing with vast volumes of data that need to be stored, managed, and analyzed efficiently. Setting up Windows and SQL Server to handle large data volumes requires a combination of expert knowledge and best practices to ensure optimal performance and scalability. In this article, we will discuss some expert tips and best practices for setting up Windows and SQL Server to handle large data volumes, so that you can get the most out of your system and ensure that your data is secure and easily accessible.

Expert Tips

Things that need to be considered:

- SQL File Locations: When dealing with large amounts of data, it is important to separate the data files from the log files and the system files. Data files see mostly random reads and writes, while log files are written sequentially, so keeping them on separate volumes stops the two I/O patterns from competing and prevents a growing data file from consuming the space the log needs. A full log volume halts write activity on the database, so the log should have its own headroom. It is also a best practice to store the data files on a separate volume or set of volumes to ensure that there is enough space for the data files to grow.

- CPU and RAM: It is important to have sufficient CPU and RAM to handle the workload. As a starting point for a large data workload, plan for 8 to 16 cores and 64 to 128 GB of RAM, and scale up based on what monitoring shows. Additionally, it is recommended to use a server-class CPU, such as Intel Xeon or AMD EPYC.

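- Type of Volumes: Use the appropriate type of volume. For example, a RAID 5 or RAID 6 configuration for the data files uses disk capacity more efficiently than RAID 1 while still tolerating the loss of one disk (RAID 5) or two disks (RAID 6). The trade-off is slower writes, because parity has to be updated on every write, so for write-heavy workloads RAID 10 is usually the faster choice. Additionally, it is recommended to use SSDs for the log files to ensure that they can be written quickly.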

- Network: When dealing with large amounts of data, it is important to have a fast and reliable network. This can include using multiple NICs, such as 10 GbE or InfiniBand, to ensure that there is enough bandwidth to transfer the data. Additionally, it is recommended to use a dedicated network for the SQL Server to ensure that the data is not impacted by other network traffic.

- Backup and Recovery: Regularly backing up your large data sets and testing your recovery methods is critical to ensure data availability and integrity. Consider a backup strategy that combines full backups, differential backups, and log backups on an automated schedule. Database snapshots can complement this for quick reverts, but they depend on the source database and are not a substitute for backups. Additionally, test and practice recovery methods such as point-in-time recovery and disaster recovery, and consider high availability options like Always On availability groups.

By following these best practices, you can ensure that your Windows Server and SQL Server are properly configured to handle large amounts of data and that the data is secure, available, and recoverable.
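To make the point-in-time recovery idea concrete, here is a minimal Python sketch of how a restore sequence could be chosen from a backup history. The `Backup` class and `restore_sequence` function are hypothetical names invented for this illustration, and the logic works on finish times only (a real restore is driven by LSNs in the backup headers), so treat it as a simplified model rather than what SQL Server does internally.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Backup:
    kind: str            # "full", "diff", or "log" (hypothetical labels)
    finished: datetime   # time the backup completed


def restore_sequence(backups, target):
    """Pick the backups to restore, in order, to reach `target`.

    Simplified model: latest full backup before the target, then the
    latest differential after that full (if any), then every log backup
    after that base up to the first one that covers the target time.
    """
    ordered = sorted(backups, key=lambda b: b.finished)

    fulls = [b for b in ordered if b.kind == "full" and b.finished <= target]
    if not fulls:
        raise ValueError("no full backup finished before the target time")
    base = fulls[-1]
    sequence = [base]

    diffs = [
        b for b in ordered
        if b.kind == "diff" and base.finished < b.finished <= target
    ]
    if diffs:
        base = diffs[-1]
        sequence.append(base)

    covered = False
    for b in ordered:
        if b.kind == "log" and b.finished > base.finished:
            sequence.append(b)
            if b.finished >= target:
                covered = True  # this log backup contains the target time
                break
    if not covered:
        raise ValueError("no log backup covers the target time")
    return sequence
```

The last log backup in the returned list is the one that would be restored with a stop-at time equal to the target. Running a check like this against the real backup history is one way to rehearse a recovery before it is needed.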

CPU Performance Optimization for SQL Server

CPU optimization is crucial for SQL Server’s performance. Learn how to monitor and optimize CPU usage and allocate the appropriate number of cores.

CPU, or central processing unit, is the heart of a computer’s hardware and is responsible for executing instructions for the operating system and applications.

In the context of an SQL Server, the CPU plays a critical role in the performance of the system. SQL Server uses the CPU to process and execute queries, maintain indexes, and perform other tasks. When a query is executed, the SQL Server query optimizer generates an execution plan that specifies which operations the CPU should perform in order to return the requested data.

When there are not enough CPU resources available, SQL Server may start to experience performance issues. This can include slow query performance, longer waits for queries to get time on a CPU, and, because transactions hold their locks for longer, more blocking and a higher chance of deadlocks.

To ensure that SQL Server has sufficient CPU resources, it is important to monitor the CPU usage on the server. Windows Performance Monitor can be used to track CPU usage, as well as other performance metrics, such as memory and disk usage. Additionally, it is important to ensure that the server has sufficient CPU cores and that they are running at the appropriate clock speed.

In addition to monitoring the CPU usage, it is also important to optimize the queries and indexes that are used by the system. This can include rewriting poorly performing queries, creating new indexes, or modifying existing indexes. Additionally, make sure the server is not also running other resource-intensive workloads, such as a virtualization role, that compete with SQL Server for CPU.

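It is also important to distinguish physical cores from logical processors. With simultaneous multithreading (for example, Intel Hyper-Threading), each physical core appears to Windows as two logical processors, and SQL Server schedules its work on logical processors. Two logical processors on one core do not deliver the throughput of two physical cores, so it is important to understand the specific workloads and usage patterns of your SQL Server environment to ensure that the appropriate number of CPU cores is allocated.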

RAM Optimization for SQL Server

RAM is a critical component of an SQL Server’s performance. Discover how to monitor and optimize memory usage and configure SQL Server to use an appropriate amount of memory.

RAM, or random access memory, is a type of computer memory that is used to temporarily store data that the CPU needs to access quickly.

In the context of SQL Server, RAM plays a critical role in the performance of the system. SQL Server uses RAM to store data pages, indexes, and other structures that are needed to perform queries and other tasks. When a query is executed, SQL Server retrieves the data pages and other structures from RAM rather than reading them from disk. This can significantly improve the performance of the query.

When there is not enough RAM available, SQL Server may start to experience performance issues. This can include slow query performance, increased disk I/O, and an increased number of page faults. Additionally, when there is not enough RAM, SQL Server may start to use the page file on the disk, which can slow down the system even more.

To ensure that SQL Server has sufficient RAM, it is important to monitor the memory usage on the server. Windows Performance Monitor can be used to track memory usage, as well as other performance metrics, such as CPU and disk usage. Additionally, it is important to ensure that the server has sufficient RAM and that SQL Server is configured to use an appropriate amount of memory.

In addition to monitoring memory usage, it is also important to optimize the queries and indexes that are used by the system, because a query that scans less data needs fewer pages in memory. This can include rewriting poorly performing queries, creating new indexes, or modifying existing indexes. Additionally, make sure the server is not also running other resource-intensive workloads, such as a virtualization role, that compete with SQL Server for memory.

It’s important to note that SQL Server manages its memory dynamically: the Database Engine automatically adjusts the amount of memory it uses based on the workload and available resources. This helps to optimize the memory usage of SQL Server, but it should be combined with monitoring and with explicit limits. The min server memory and max server memory configuration options set the range within which the Database Engine can grow and shrink, and setting max server memory leaves room for Windows and any other processes on the server.
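As a rough illustration of choosing a max server memory value, the sketch below applies a commonly cited rule of thumb for a dedicated SQL Server host: reserve 1 GB for the operating system, plus 1 GB for every 4 GB of RAM between 4 GB and 16 GB, plus 1 GB for every 8 GB above 16 GB. This heuristic is an assumption made for the example, not an official formula, and the function name is hypothetical. The result is only a starting point to refine with monitoring.

```python
def suggest_max_server_memory_mb(total_ram_gb: int) -> int:
    """Starting value for 'max server memory (MB)' on a dedicated host.

    Assumed rule of thumb (not an official formula):
      - 1 GB reserved for the OS
      - plus 1 GB per 4 GB of RAM between 4 GB and 16 GB
      - plus 1 GB per 8 GB of RAM above 16 GB
    """
    if total_ram_gb < 4:
        raise ValueError("this heuristic assumes at least 4 GB of RAM")

    reserved_gb = 1
    reserved_gb += (min(total_ram_gb, 16) - 4) // 4
    reserved_gb += max(total_ram_gb - 16, 0) // 8

    return (total_ram_gb - reserved_gb) * 1024


if __name__ == "__main__":
    for ram_gb in (64, 128, 256):
        print(f"{ram_gb} GB RAM -> {suggest_max_server_memory_mb(ram_gb)} MB")
```

If the server also hosts other services or additional SQL Server instances, their memory needs would have to be subtracted as well.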

Volume and HDD Type Considerations for SQL Server

Choosing the right volume and HDD type is important for setting up a SQL Server that can handle large amounts of data. Find out how to separate data, log, and system files, and choose the appropriate type of volume for different types of data.

Volume and HDD type are important factors to consider when setting up a SQL Server for handling large amounts of data.

A volume is a logical unit of storage that Windows formats and presents under a drive letter or mount point. It can sit on a single disk or on an array of disks, and those disks can be traditional hard disk drives (HDDs) or solid-state drives (SSDs). The choice of volume layout and drive type can have a significant impact on the performance of the SQL Server.

HDDs are traditional spinning disk drives that are commonly used for storage. They are less expensive than SSDs and are well-suited for storing large amounts of data. However, HDDs are slower than SSDs and can be affected by mechanical failures.

SSDs, on the other hand, are faster and more reliable than HDDs. They use flash memory to store data, which allows for faster access times and higher I/O performance. SSDs are more expensive than HDDs but are well-suited for storing frequently accessed data, such as indexes and system databases.

When setting up a SQL Server for handling large amounts of data, it is a best practice to separate the data files from the log files and the system files. This keeps the random I/O of the data files away from the sequential writes of the log and prevents a growing data file from consuming the space the log needs. It is also a best practice to store the data files on a separate volume or set of volumes, such as a RAID 5 or RAID 6 configuration, to ensure that there is enough space for the data files to grow.

It is also important to ensure that the appropriate type of volume is used for the different types of data. For example, using SSDs for the log files and frequently accessed data can improve performance.
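When comparing RAID layouts for the data volume, it helps to see how much usable space each level leaves. The following Python sketch computes usable capacity for arrays of identical disks. The function name and the example disk counts are illustrative assumptions, and RAID 1 is modeled as a simple two-disk mirror.

```python
def raid_usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB for an array of identical disks."""
    minimum_disks = {"RAID1": 2, "RAID5": 3, "RAID6": 4, "RAID10": 4}
    if level not in minimum_disks:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum_disks[level]:
        raise ValueError(f"{level} needs at least {minimum_disks[level]} disks")

    if level == "RAID1":
        if disks != 2:
            raise ValueError("RAID1 is modeled here as a two-disk mirror")
        return disk_tb                   # one disk of data, one mirror copy
    if level == "RAID10":
        if disks % 2:
            raise ValueError("RAID10 needs an even number of disks")
        return disks / 2 * disk_tb       # half of the disks hold mirror copies
    if level == "RAID5":
        return (disks - 1) * disk_tb     # one disk's worth of parity
    return (disks - 2) * disk_tb         # RAID6: two disks' worth of parity


if __name__ == "__main__":
    for level in ("RAID5", "RAID6", "RAID10"):
        print(level, raid_usable_tb(level, disks=8, disk_tb=2.0), "TB usable")
```

With eight disks, RAID 6 gives up two disks of capacity and RAID 10 gives up four, which is why parity RAID is attractive for large data files even though its writes are slower.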

Optimizing tempdb for SQL Server Performance

tempdb plays a crucial role in the performance of SQL Server, particularly for handling large amounts of data. Learn how to optimize tempdb to improve SQL Server’s performance.

tempdb is a special system database in SQL Server that is used to store temporary objects such as temporary tables, temporary stored procedures, and table variables. It also holds the intermediate results of certain query operations, such as sorting and grouping, and it is used by the Database Engine to store versioning information for row versioning-based isolation levels.

When dealing with large amounts of data, tempdb can play a critical role in the performance of the system. As the amount of data in the system increases, so does the amount of temporary data that needs to be stored in tempdb. This can include large intermediate results of queries, large temporary tables, and large table variables.

Due to its importance, it is essential to ensure that tempdb is properly configured and managed to handle large amounts of data. Here are some best practices:

- Size: tempdb should be sized appropriately to handle the workload. This means that it should have enough space to store the maximum amount of temporary data that is expected to be used by the system.

- Placement: tempdb should be placed on a separate volume or set of volumes from the data files and log files to ensure that it has sufficient space to grow.

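- Multiple files: tempdb should be configured with multiple, equally sized data files. This spreads page allocations across the files and reduces allocation contention when many sessions create temporary objects at the same time.

- Autogrow: tempdb should be configured with autogrow enabled to ensure that it can grow as needed. Pre-size the files for the expected workload and use the same fixed growth increment on every file, so that autogrow is a safety net rather than a regular event.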

- Monitor: it is important to monitor the usage of tempdb, including the amount of space used, the number of active sessions, and the number of transactions to ensure that it is functioning as expected.

By following these best practices, you can ensure that tempdb is properly configured and managed to handle large amounts of data and that the performance of the system is not negatively impacted by tempdb.
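The multiple-files practice can be sketched in a few lines of Python. The starting count follows Microsoft's general guidance of one data file per logical processor up to eight files, with more added in multiples of four only if contention persists. The function names, the `tempdevN` file names, the folder, and the sizes are all hypothetical values chosen for this example. The script only prints T-SQL for review and does not connect to a server.

```python
def tempdb_data_file_count(logical_processors: int) -> int:
    """Starting number of tempdb data files: one per logical CPU, capped at 8."""
    if logical_processors < 1:
        raise ValueError("logical_processors must be at least 1")
    return min(logical_processors, 8)


def tempdb_add_file_statements(logical_processors, folder, size_mb, growth_mb):
    """Build ALTER DATABASE statements for the files beyond the first one.

    Every file gets the same size and growth increment so that allocations
    stay evenly spread. File names and folder are illustrative assumptions.
    """
    count = tempdb_data_file_count(logical_processors)
    statements = []
    for n in range(2, count + 1):
        statements.append(
            f"ALTER DATABASE tempdb ADD FILE (NAME = N'tempdev{n}', "
            f"FILENAME = N'{folder}\\tempdev{n}.ndf', "
            f"SIZE = {size_mb}MB, FILEGROWTH = {growth_mb}MB);"
        )
    return statements


if __name__ == "__main__":
    for stmt in tempdb_add_file_statements(16, r"T:\tempdb", 8192, 1024):
        print(stmt)
```

The existing primary tempdb data file would need to be resized to the same values so that all files match.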

Optimizing SQL Server Performance: Harnessing the Power of I/O Affinity Mask Configuration

The I/O affinity mask is a configuration option in SQL Server that allows you to specify which CPU cores are used to perform disk I/O operations.

In the context of big data, disk I/O operations can become a bottleneck in the performance of the system as the amount of data increases. When dealing with large amounts of data, it is important to ensure that the disk I/O operations are performed as efficiently as possible.

This can be useful on systems with many CPU cores where you want to dedicate specific cores to disk I/O and keep them separate from the cores that process queries. Most workloads perform well with the default setting, so this is a tuning option for specific, measured bottlenecks.

When configuring the I/O affinity mask, you can specify the mask as either a decimal or a hexadecimal value. For example, if you want to use CPU cores 0 and 1 for disk I/O operations, you would specify a mask of 3 (binary 11).
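The mask is a bitmap in which bit n stands for CPU n, so the value for a set of cores is the sum of 2 to the power of each core number. The small Python sketch below does this arithmetic in both directions. The function names are hypothetical, and the sketch only handles CPUs 0 to 31, returning the unsigned bit pattern.

```python
def affinity_mask(cpus) -> int:
    """Return the bitmask with one bit set for each CPU number in `cpus`."""
    mask = 0
    for cpu in cpus:
        if not 0 <= cpu <= 31:
            raise ValueError("this sketch only handles CPUs 0 to 31")
        mask |= 1 << cpu   # set bit number `cpu`
    return mask


def cpus_in_mask(mask: int):
    """Return the CPU numbers whose bits are set in `mask`."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


if __name__ == "__main__":
    mask = affinity_mask([0, 1])
    print(mask, hex(mask), bin(mask))
```

For cores 0 and 1 this gives 3 in decimal, 0x3 in hexadecimal, and 11 in binary, matching the example above. Cores 2 and 3 would give 12 (binary 1100).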

It is important to note that the I/O affinity mask should be configured in conjunction with other performance-related settings, in particular the CPU affinity mask: the same core should not be enabled in both masks. It is also important to monitor the disk I/O performance of the system to ensure that the I/O affinity mask is configured correctly and that disk I/O is not causing performance issues.

It is also important to consider that in modern systems, there are technologies like Non-Uniform Memory Access (NUMA) that can affect the performance of disk I/O operations. So, it’s important to understand the specific hardware architecture of the server and the workloads before configuring the I/O affinity mask.

How IOPS Impacts Your Storage Device Performance and SQL Server Workload

IOPS, or Input/Output Operations Per Second, is a measure of the performance of storage devices, such as hard drives and solid-state drives (SSDs), and is used to indicate how many input and output operations a storage device can perform in one second.

In the context of big data, IOPS can play a critical role in the performance of the system. As the amount of data increases, so does the number of input and output operations that need to be performed by the storage devices. If the storage devices do not have sufficient IOPS, requests queue up at the storage layer and the system may experience performance issues such as higher read and write latency, slow query performance, and longer waits on I/O.

To ensure that the storage devices have sufficient IOPS, it is important to monitor the IOPS of the system. Windows Performance Monitor can be used to track IOPS through disk counters such as Disk Reads/sec and Disk Writes/sec, as well as other performance metrics, such as CPU and memory usage. Additionally, it is important to ensure that the storage devices are configured to handle the workload and to take into account the specific usage patterns of the SQL Server.

It is also important to consider that IOPS are affected by the type of storage device being used. For example, traditional spinning disk hard drives typically have lower IOPS than solid-state drives (SSDs) and it’s important to have the appropriate type of storage for the workload.
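The RAID level also changes how many IOPS the disks have to deliver for a given workload, because each logical write turns into several physical operations. The Python sketch below uses the commonly cited write penalties (2 for RAID 1 and RAID 10, 4 for RAID 5, 6 for RAID 6) to estimate the back-end load. The function names and the example workload figures are assumptions for illustration, and real arrays with write caches can behave better than this simple model.

```python
# Physical I/O operations generated by one logical write, per RAID level.
WRITE_PENALTY = {"RAID1": 2, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def backend_iops(frontend_iops: float, write_fraction: float, level: str) -> float:
    """Estimate the IOPS the disks must deliver for a given workload."""
    if not 0.0 <= write_fraction <= 1.0:
        raise ValueError("write_fraction must be between 0 and 1")
    reads = frontend_iops * (1.0 - write_fraction)
    writes = frontend_iops * write_fraction
    return reads + writes * WRITE_PENALTY[level]


def throughput_mb_s(iops: float, io_size_kb: float) -> float:
    """Convert IOPS at a given I/O size into MB/s."""
    return iops * io_size_kb / 1024


if __name__ == "__main__":
    # Hypothetical workload: 10,000 IOPS from SQL Server, 25% of them writes.
    for level in ("RAID10", "RAID5", "RAID6"):
        print(level, backend_iops(10_000, 0.25, level), "back-end IOPS")
    # The same 10,000 IOPS at 8 KB per operation, the size of a data page.
    print(throughput_mb_s(10_000, 8), "MB/s")
```

The same workload therefore needs noticeably more raw disk IOPS on RAID 6 than on RAID 10, which is worth factoring in when sizing storage for write-heavy databases.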

In addition to monitoring the IOPS, it is also important to optimize the queries and indexes that are used by the system, because a well-indexed query needs fewer I/O operations in the first place. This can include rewriting poorly performing queries, creating new indexes, or modifying existing indexes. Additionally, make sure the server is not also running other resource-intensive workloads, such as a virtualization role, so that the storage devices have sufficient IOPS left for SQL Server.

Page File Configuration for SQL Server

A page file, also known as a swap file or a paging file, is a file on a hard disk drive (HDD) or solid-state drive (SSD) that the operating system uses as an extension of physical memory (RAM).

In the context of SQL Server and big data, the page file acts as a safety net for the stability of the system rather than as a performance feature. When the amount of data in the system increases, the amount of memory required to work with it also increases, and it may exceed the amount of physical RAM available on the server. When this happens, the operating system will start to move memory pages that are not currently in use to the page file. This can help to prevent the system from running out of memory, but it also slows the system down, because the page file is much slower than RAM.

To ensure that the page file is properly configured to handle large amounts of data, it is important to follow these best practices:

- Placement: the page file should be placed on a separate volume or set of volumes from the data files and log files to ensure that it has sufficient space to grow.

- Size: the page file should be sized appropriately to handle the workload. This means that it should have enough space to store the maximum amount of data that is expected to be used by the system.

- Multiple files: it is recommended to configure multiple page files to spread the I/O across multiple physical disks.

- Monitor: it is important to monitor the usage of the page file, including the amount of space used, and the number of page faults to ensure that it is functioning as expected.

It is important to note that with sufficient RAM and a well-tuned system, the use of page files should be minimal. However, in the case of big data, having a well-configured page file can help to prevent the system from running out of memory and ensure stability and performance.

Boosting SQL Server Performance with Buffer Pool Extension and NVRAM Technology

Buffer pool extension is a feature in SQL Server that allows you to extend the buffer pool memory, which is used to cache data pages and other structures, with non-volatile random access memory (NVRAM) devices, such as solid-state drives (SSDs).

In the context of big data, the buffer pool can become a bottleneck in the performance of the system as the amount of data increases. When there is not enough buffer pool memory available, SQL Server may start to experience performance issues such as slow query performance, increased disk I/O, and an increased number of page faults.

Because SSDs offer much lower latency and higher random I/O throughput than spinning disks, pages that no longer fit in RAM can be read back from the extension far faster than from data files on slower storage, which can significantly improve the performance of the system.

For example, let’s say you have a SQL Server with 256GB of RAM, of which the buffer pool is currently using 120GB, and a workload whose working set is larger than that. You decide to add a buffer pool extension on SSDs that have a total of 256GB of storage. Now the buffer pool can cache a total of 376GB of pages (120GB in RAM + 256GB on SSD). Pages in the extension are slower to reach than pages in RAM, and only clean (unmodified) pages are written to the extension, so it mainly helps read-heavy workloads.
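The arithmetic in this example, together with a sanity check on the extension size, can be sketched as follows. The check assumes the guidance in Microsoft's documentation that the extension should be no more than 16 times the size of max server memory, and it treats the 120GB from the example as the max server memory value. Both are assumptions made for this illustration, and the function name is hypothetical.

```python
def buffer_pool_extension_summary(max_server_memory_gb: float,
                                  extension_gb: float,
                                  max_ratio: float = 16.0) -> dict:
    """Summarize cache capacity with a buffer pool extension.

    `max_ratio` is the assumed upper bound for extension size relative to
    max server memory (a 1:16 memory-to-extension ratio).
    """
    if max_server_memory_gb <= 0 or extension_gb < 0:
        raise ValueError("sizes must be positive")
    ratio = extension_gb / max_server_memory_gb
    return {
        "total_cache_gb": max_server_memory_gb + extension_gb,
        "extension_to_memory_ratio": ratio,
        "within_recommended_ratio": ratio <= max_ratio,
    }


if __name__ == "__main__":
    # Values from the example: 120 GB in RAM plus a 256 GB extension.
    print(buffer_pool_extension_summary(120, 256))
```

For the numbers in the example, the extension is a little over twice the size of the in-memory buffer pool, well inside the assumed limit.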

It is important to note that the buffer pool extension is available in the 64-bit Standard and Enterprise editions of SQL Server, not in Enterprise edition only. It is also important to monitor the buffer pool usage and the performance of the system to ensure that the buffer pool extension is configured correctly and that it is providing the desired performance improvements.

It’s also important to keep in mind that buffer pool extension does not replace the other best practices. Optimizing queries and indexes, monitoring disk I/O, and making sure the server is not also running other resource-intensive workloads, such as a virtualization role, all help to improve the performance of the system with big data.

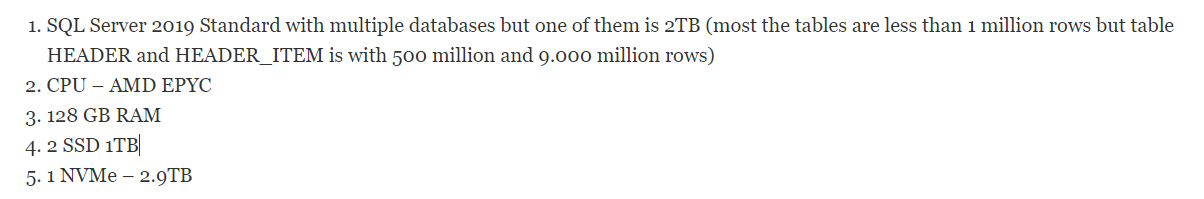

Expert advice: How I Set Up My Physical SQL Server 2019 for a Massive 2TB Database with 500 Million Rows

And now, one of the setups that I have worked with:

The crucial thing about this database is that there are 12 triggers on table HEADER and 16 triggers on table HEADER_ITEM, and altogether there were more than 160 triggers.

First of all, this is not a VM but a physical server running Windows Server 2019. It has 2 NICs and 2 FC ports set up in 2 NIC teams, so there was one LAN at 2GbE and one LAN at 20GbE.

The mdf file is located on SSD storage in RAID 6. The ldf file is located on NVMe. The temp tables (tempdb) are located on one SSD, and on another SSD we created an ndf file to which we moved some of the biggest indexes.

Need more expert advice on optimizing your SQL Server for large data workloads? Contact us and let’s discuss how we can help you boost your performance and scalability!

Resources: https://learn.microsoft.com/en-us/sql/sql-server/?view=sql-server-ver15

- Best Practices for Setting Up Windows and SQL Server to Handle Large Data Volumes: Expert Tips - February 20, 2023